The Future of Generative AI: Where Are Large Language Models Headed?

- Madhuri Pagale

- Mar 19, 2025

Updated: Mar 25, 2025

Written By: Girish Kanoje, Rushikesh Sadafule, and Pouras Suryawanshi

Generative AI has developed at an unprecedented pace in recent years, and Large Language Models (LLMs) in particular have advanced rapidly. Progress in high-performance computing, deep learning architectures, and large-scale training datasets has improved the capabilities, context awareness, and usability of LLMs. The next wave of AI development, however, will focus on efficiency, personalization, and ethical AI. Let's examine the major themes shaping the future of generative AI.

Smaller, More Efficient AI Models

Scaling up models has been the dominant route to better performance, but in the future efficiency will be prioritized over sheer size. Researchers are building more compact models that stay capable while using less memory and processing power. Lowering those requirements reduces costs for businesses, makes large-scale deployment practical, and broadens access to AI worldwide. Key methods include:

• Model quantization (storing weights and computing with lower-precision numbers, such as 8-bit integers instead of 32-bit floats, to shrink the memory footprint)

• Knowledge distillation (training a smaller "student" model to reproduce the outputs of a larger "teacher" model)

• Sparse computation (activating only the parts of a network that are relevant to a given input, or skipping zero-valued weights, so less work is done per token)
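
To make quantization concrete, here is a minimal Python sketch of symmetric 8-bit quantization, assuming one shared scale for the whole tensor (production toolkits typically use per-channel scales, calibration data, and packed storage). The function names are illustrative, not taken from any particular library. Each weight is divided by the scale and rounded to an integer in [-127, 127], so it fits in one byte instead of the four bytes a 32-bit float needs.

```python
# Minimal sketch of symmetric per-tensor int8 quantization.
# Assumption: one scale for the whole tensor; real toolkits are more elaborate.

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]

if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9, -0.5]
    codes, scale = quantize_int8(weights)
    approx = dequantize_int8(codes, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, approx))
    print("codes:", codes, "scale:", scale, "max abs error:", max_err)
```

Because every value is rounded to the nearest multiple of the scale, the reconstruction error per weight is at most half the scale, which is part of why 8-bit quantization often costs relatively little accuracy while using a quarter of the memory of 32-bit weights.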

Customized AI Helpers

In the future, LLMs will develop into genuinely customized AI companions rather than generic information retrievers. Thanks to developments in memory-based architectures and retrieval-augmented generation (RAG), AI will be able to:

• Recall previous interactions

• Adjust to user preferences and behavior

• Provide context-aware responses in real time

As a result, AI will become an intelligent digital assistant that helps users with tasks like content creation and research and offers proactive recommendations.
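
The retrieval step behind RAG can be sketched in a few lines of Python. This is a toy version under clearly simplified assumptions: the hypothetical embed function uses word counts rather than a learned embedding model, and the "memory" is a plain list instead of a vector database. The principle is the same: find the stored snippets most similar to the user's request and place them in the prompt so the model can answer with that context.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag-of-words count vector (an assumption;
    real systems use learned dense embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return the k stored snippets most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Prepend the retrieved context so the model can ground its answer."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

if __name__ == "__main__":
    memory = [
        "User prefers concise answers with bullet points.",
        "User is writing a research paper on solar energy.",
        "User's favourite programming language is Python.",
    ]
    print(build_prompt("Suggest sources for my solar energy paper", memory, k=1))
```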

Multimodal Capabilities

Beyond text, generative AI will also produce images, audio, video, and 3D models. Prominent AI systems, including OpenAI's GPT-4, Google DeepMind's Gemini, Meta's LLaMA, and Microsoft's Copilot, already incorporate multimodal capabilities. Future AI will seamlessly integrate complex multimedia content, transforming creative industries like gaming, filmmaking, and design.

Enhanced Reasoning and Common-Sense Understanding

Despite their impressive capabilities, current LLMs struggle with logical reasoning and real-world common sense. Future AI advancements will focus on:

• Improved reasoning engines for better problem-solving

• Understanding causality rather than just correlation

• Neurosymbolic AI, which combines deep learning with symbolic reasoning

These enhancements will make AI more reliable in decision-making tasks across sectors like healthcare, finance, and law.

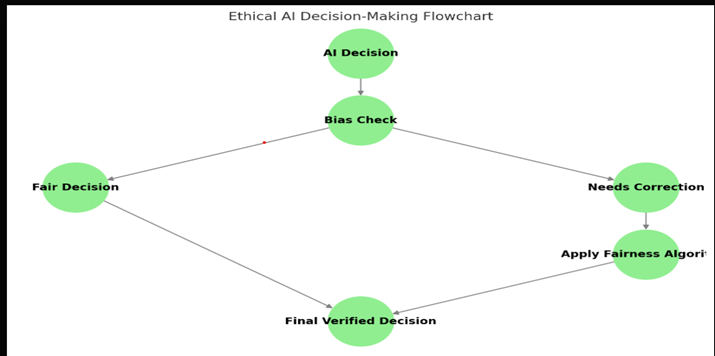

Ethical AI and Bias Reduction

Bias, misinformation, and ethical concerns remain major challenges for AI. Future innovations will focus on fairer, more transparent AI. Ethical AI development will prioritize:

• Bias reduction through improved training data and auditing mechanisms

• Explainability and accountability for AI decisions

• Regulatory compliance to align AI with ethical standards
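
Auditing mechanisms often start with simple measurements of a model's outputs. The Python sketch below computes one common fairness metric, the demographic parity gap: the difference in positive-prediction rates between groups. The predictions and group labels are hypothetical, and demographic parity is only one of several fairness criteria; which metric and threshold an audit should use depends on the application.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive rates between any two groups (0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

if __name__ == "__main__":
    # Hypothetical loan-approval outputs with an applicant group label.
    preds = [1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(positive_rates(preds, groups))
    print("parity gap:", demographic_parity_gap(preds, groups))
```

In this made-up example, group A is approved 75% of the time and group B 25% of the time, a gap of 0.5 that an audit would likely flag for closer investigation.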

AI Agents and Autonomous Systems

LLMs will evolve into autonomous AI agents capable of performing complex tasks with minimal human intervention. These agents will integrate with software tools, APIs, and automation systems, enabling them to assist in:

• Coding and software development

• Financial analysis and legal assistance

• Scientific research and medical diagnostics
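
At its core, an agent runs a loop: the model proposes an action, the surrounding program executes the matching tool, and the result is fed back until the model produces a final answer. The Python sketch below illustrates that loop under stated assumptions: fake_model is a hypothetical stand-in for a real LLM, the tools are trivial arithmetic functions, and the JSON message format is invented for illustration rather than taken from any vendor's API.

```python
import json

# Hypothetical tools; a real agent would wrap actual APIs or software tools.
def add(a, b):
    return a + b

def multiply(a, b):
    return a * b

TOOLS = {"add": add, "multiply": multiply}

def fake_model(history):
    """Stand-in for an LLM (an assumption): returns a JSON tool call or a
    final answer, depending on how many tool results it has seen."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if not results:
        return json.dumps({"tool": "add", "args": {"a": 2, "b": 3}})
    if len(results) == 1:
        return json.dumps({"tool": "multiply", "args": {"a": int(results[0]), "b": 4}})
    return json.dumps({"final": f"The result is {results[-1]}"})

def run_agent(task, model=fake_model, max_steps=5):
    """Propose-act-observe loop: ask the model, run the requested tool,
    feed the result back, and stop on a final answer or the step limit."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = json.loads(model(history))
        if "final" in reply:
            return reply["final"]
        tool = TOOLS.get(reply["tool"])
        if tool is None:
            # Report unknown tools back to the model instead of crashing.
            history.append({"role": "tool", "content": f"unknown tool {reply['tool']}"})
            continue
        result = tool(**reply["args"])
        history.append({"role": "tool", "content": str(result)})
    return "Stopped: step limit reached"

if __name__ == "__main__":
    print(run_agent("Compute (2 + 3) * 4"))
```

The max_steps limit is a simple safeguard: even a well-behaved agent should not be allowed to call tools indefinitely, which matters when tools have real-world side effects.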

Democratization of AI

AI development is becoming more decentralized with the emergence of open-source and open-weight LLMs, such as those from Mistral AI and Meta's LLaMA family. Open models improve accessibility, reduce monopolization, and speed up innovation. Eventually, individuals and small enterprises will have access to powerful AI without having to rely on tech giants.

Human-AI Collaboration

The goal of AI is not to replace humans but to boost human productivity and creativity. From co-authoring books to helping doctors with diagnostics, AI will act as a cognitive amplifier. The emphasis will shift from automation to augmentation, with AI becoming an asset across many industries.

AI Regulation and Governance

As AI models grow more powerful, governments and institutions are calling for tougher AI regulations. We can anticipate:

• International frameworks for AI governance

• Ethical codes to avoid abuse

• Stronger regulation of AI content

The Future

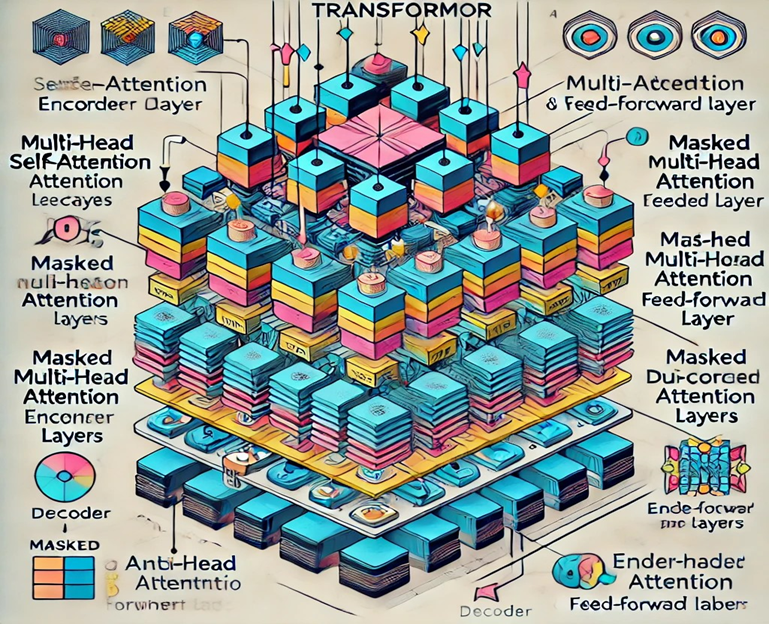

Large Language Models (LLMs) are poised to revolutionize artificial intelligence by moving beyond text-only interactions toward more dynamic, multimodal, and autonomous systems. Today's LLMs are built on the Transformer architecture, which is powerful but computationally demanding: the cost of standard self-attention grows quadratically with input length, which limits scalability. More efficient designs, such as Sparse Transformers, Mixture of Experts (MoE), and State Space Models (SSMs), aim to reduce computational cost while maintaining performance, and they are likely to become more prevalent. These developments could let AI models respond in real time without excessive processing power, handle longer contexts, and process information more intelligently.
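
To illustrate why Mixture of Experts saves computation, here is a simplified Python sketch of top-k routing. A router scores every expert for the current input, only the k best-scoring experts actually run, and their outputs are mixed using softmax weights over those k scores. The toy experts and hand-set gate weights are hypothetical; real MoE layers use neural-network experts with learned routers and usually add load-balancing objectives.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, experts, gate_weights, k=2):
    """Top-k Mixture-of-Experts forward pass for a single input vector.

    x: list of floats (one token's features)
    experts: callables mapping x to an output vector (all the same length)
    gate_weights: one router weight vector per expert
    Returns the mixed output and the indices of the experts that ran.
    """
    # Router: score each expert with a dot product against the input.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected experts' scores gives the mixing weights.
    weights = softmax([logits[i] for i in top])
    # Only the selected experts are evaluated; the others are skipped entirely.
    outputs = [experts[i](x) for i in top]
    mixed = [sum(p * y[j] for p, y in zip(weights, outputs)) for j in range(len(outputs[0]))]
    return mixed, top

if __name__ == "__main__":
    experts = [
        lambda v: [2 * t for t in v],   # expert 0: doubles
        lambda v: [t + 1 for t in v],   # expert 1: shifts
        lambda v: [-t for t in v],      # expert 2: negates
        lambda v: [0.0 for _ in v],     # expert 3: zeros
    ]
    gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    output, chosen = moe_layer([0.5, 2.0], experts, gates, k=2)
    print("experts used:", chosen, "output:", output)
```

With four experts and k=2, each input pays for only half of the expert computation, while the model as a whole keeps all four experts' parameters.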

Training methods are also changing significantly. Conventional models are static and resource-intensive because they must be fully retrained to update their knowledge.

The next wave of AI development points toward continuous learning, in which models can evolve over time without requiring full retraining. Techniques like Retrieval-Augmented Generation (RAG) will be essential for keeping LLMs responsive to real-time data and relevant without repeatedly consuming enormous amounts of compute. Furthermore, parameter-efficient methods such as adapter layers and Low-Rank Adaptation (LoRA) will enable fine-tuning with far lower energy consumption, increasing the sustainability and scalability of AI.
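
The low-rank idea behind LoRA fits in one line: the pretrained weight matrix W stays frozen, and the model learns a small update factored as two matrices, so the layer computes y = Wx + (alpha / r) · B(Ax), where B is d_out × r, A is r × d_in, and the rank r is much smaller than either dimension. The plain-Python sketch below (no deep-learning framework assumed) shows that forward pass and compares trainable-parameter counts; the matrix sizes and alpha value are illustrative.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x)

    W: frozen d_out x d_in pretrained weight (never updated)
    A: trainable r x d_in matrix
    B: trainable d_out x r matrix
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

def trainable_params(d_out, d_in, r):
    """(full fine-tuning, LoRA) trainable-parameter counts for one matrix."""
    return d_out * d_in, r * (d_out + d_in)

if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]
    A = [[1.0, 1.0]]    # r = 1
    B = [[0.5], [0.0]]  # in practice B starts at zero and is learned
    print(lora_forward(W, A, B, [1.0, 2.0], alpha=2, r=1))
    print(trainable_params(4096, 4096, 8))
```

For a single 4096 × 4096 weight matrix with rank 8, full fine-tuning would update 16,777,216 parameters while LoRA trains 65,536, roughly 256 times fewer. In the original LoRA formulation, B is initialized to zero, so the adapted layer initially behaves exactly like the pretrained one.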

The way LLMs engage with the outside world is undergoing a significant change. While text has been the main focus of these models up to this point, future versions will smoothly incorporate speech, vision, and even autonomous actions. As a result of this change, AI assistants will be able to do more than just reply to text messages; they will also be able to comprehend voice commands, analyze images, and even perform actions inside of apps or smart environments. This is especially important for sectors like healthcare, where AI-powered diagnostics will be able to process patient histories and medical images to make more precise recommendations.

Memory management is another area undergoing radical improvements. Current LLMs have limited context windows, meaning they struggle to retain long-term knowledge. Future models will leverage external memory stores, hierarchical memory systems, and vector databases to recall past interactions with greater precision. This will make AI interactions feel more human-like, where the model remembers previous conversations and adapts accordingly, rather than treating each session as an isolated event.
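
One way to picture a hierarchical memory is as two tiers: recent turns stay inside the model's context window, and older turns move to an external store from which relevant ones are recalled. The Python sketch below is a hypothetical illustration of that design, not how any particular product works: it counts words as a rough stand-in for tokens and recalls memories by keyword overlap, where a real system would use a tokenizer and embedding search in a vector database.

```python
import re

def words(text):
    """Lowercased word list; a rough proxy for tokens (an assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())

class ConversationMemory:
    """Hypothetical two-tier memory: a bounded short-term window plus an
    unbounded long-term store searched by keyword overlap."""

    def __init__(self, budget_words=20):
        self.budget_words = budget_words
        self.recent = []     # short-term: must fit the context budget
        self.long_term = []  # external store: evicted turns land here

    def add(self, turn):
        self.recent.append(turn)
        # Evict the oldest turns while the window exceeds the budget.
        while sum(len(words(t)) for t in self.recent) > self.budget_words and len(self.recent) > 1:
            self.long_term.append(self.recent.pop(0))

    def recall(self, query, k=1):
        """Return up to k long-term turns sharing the most words with the query."""
        q = set(words(query))
        scored = [(len(q & set(words(t))), t) for t in self.long_term]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [t for _, t in scored[:k]]

    def context(self, query):
        """Prompt context: recalled long-term memories, then recent turns."""
        return self.recall(query) + self.recent

if __name__ == "__main__":
    mem = ConversationMemory(budget_words=12)
    mem.add("My dog is called Biscuit.")
    mem.add("I am planning a trip to Japan in April.")
    mem.add("Can you suggest some hotels in Tokyo?")
    print(mem.context("What should I feed my dog?"))
```

Here the earliest turn has long since left the short-term window, yet it is brought back when a later question makes it relevant, which is the behavior the paragraph above describes.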

The way AI is deployed is also shifting. Today, most LLMs operate in the cloud, requiring an internet connection and significant server-side processing. However, advancements in hardware optimization and quantization techniques are enabling AI to run efficiently on local devices. This move towards edge AI means that LLMs will soon function on smartphones, IoT devices, and embedded systems, reducing latency, improving privacy, and making AI-powered tools more accessible in areas with limited connectivity.
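
A quick back-of-the-envelope calculation shows why quantization matters for edge devices. Weight storage is roughly the parameter count times the bits per parameter, divided by 8 to get bytes. The sketch below applies that to a hypothetical 7-billion-parameter model; it counts the weights only and ignores activations, the key-value cache, and quantization metadata such as scales, so real memory use is higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate storage for model weights alone, in gigabytes (1e9 bytes).
    Ignores activations, KV cache, and quantization scale metadata."""
    return num_params * bits_per_param / 8 / 1e9

if __name__ == "__main__":
    params = 7e9  # a hypothetical 7-billion-parameter model
    for label, bits in [("fp32", 32), ("fp16", 16), ("int8", 8), ("int4", 4)]:
        print(f"{label}: {weight_memory_gb(params, bits):.1f} GB")
```

For this example the weights take about 28 GB at 32-bit precision, 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit: the difference between a model that needs server-class hardware and one that can plausibly fit in the memory of a recent high-end smartphone.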

As LLMs become more powerful, ethical concerns and security challenges are becoming increasingly important. Future developments will emphasize bias mitigation, ensuring AI-generated responses are more balanced and fair. Advanced security measures will also be implemented to combat potential threats like misinformation, AI-generated cyberattacks, and deepfake content. Techniques such as digital watermarking and adversarial defense models will play a crucial role in maintaining AI integrity.
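
Digital watermarking of generated text can work statistically. One published approach (Kirchenbauer et al., 2023, "A Watermark for Large Language Models") pseudo-randomly splits the vocabulary into a "green" and a "red" list at each step, seeded by the previous token, and nudges generation toward green tokens; a detector that knows the key counts green tokens and computes a z-score. The Python sketch below is a simplified illustration of that idea, not the paper's implementation: green-list membership is decided by a keyed hash of the (previous token, token) pair, the KEY and GAMMA values are assumptions, and toy_generate is a hypothetical stand-in that simply prefers a green candidate instead of adjusting model logits.

```python
import hashlib
import math
import random

GAMMA = 0.5          # assumed fraction of tokens that are "green" at each step
KEY = "secret-key"   # hypothetical key shared by generator and detector

def is_green(prev_token, token, key=KEY, gamma=GAMMA):
    """Pseudo-randomly assign `token` to the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def watermark_z_score(tokens, gamma=GAMMA):
    """z-statistic for 'more green tokens than chance would give'."""
    t = len(tokens) - 1  # number of scored (previous, current) pairs
    if t < 1:
        raise ValueError("need at least two tokens")
    greens = sum(is_green(p, c, gamma=gamma) for p, c in zip(tokens, tokens[1:]))
    return (greens - gamma * t) / math.sqrt(t * gamma * (1 - gamma))

def toy_generate(vocab, length, watermark, seed=0):
    """Hypothetical generator: picks from 4 random candidates per step and,
    when watermarking, prefers a green one (a crude stand-in for logit biasing)."""
    rng = random.Random(seed)
    out = [rng.choice(vocab)]
    for _ in range(length - 1):
        candidates = rng.sample(vocab, 4)
        if watermark:
            greens = [c for c in candidates if is_green(out[-1], c)]
            out.append(greens[0] if greens else candidates[0])
        else:
            out.append(candidates[0])
    return out

if __name__ == "__main__":
    vocab = [f"w{i}" for i in range(50)]
    print("watermarked z:", watermark_z_score(toy_generate(vocab, 200, watermark=True)))
    print("plain z:", watermark_z_score(toy_generate(vocab, 200, watermark=False)))
```

Ordinary text should score near zero, while watermarked text scores far higher, so a detector can flag it with a threshold (for example, z > 4) without access to the model itself.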

The next decade will mark a significant transformation in AI, with LLMs moving beyond simple text prediction to becoming dynamic, context-aware, and action-oriented systems. These advancements will not only improve efficiency and accessibility but also unlock new possibilities for human-AI collaboration. The challenge ahead will be balancing innovation with ethical responsibility, ensuring AI remains a force for progress while addressing the risks that come with it.

Conclusion

The future of generative AI is filled with possibilities. From smaller, efficient AI models to multimodal, personalized assistants, AI will reshape industries and human-computer interactions. However, ethical considerations, regulation, and responsible AI development will be crucial in ensuring AI’s safe and beneficial evolution. By striking the right balance between innovation and ethical AI governance, we can unlock the full potential of next-generation LLMs.
