GPT-Powered Chatbots vs. Traditional Rule-Based Systems

- Madhuri Pagale

- Mar 19, 2025

- 12 min read

Blog by: Mayur Chaudhari, Harshal Patil, Akshay Dhere

We are living in exciting times, in the context of technology at least. Over the past few years, a technology called Generative Artificial Intelligence (GenAI for short) has won over almost everyone who owns an electronic gadget. When OpenAI released ChatGPT (based on GPT-3.5 at the time) in 2022, the potential of this technology became clear to the average layperson, and the tech industry was prompted to double down on investment and research in it.

But before the advent of GenAI chatbots built on Large Language Models (LLMs) and Natural Language Processing (NLP), we had rule-based chatbot systems, which were not even half as smart as their GPT-powered counterparts. Yet they had their own pros and cons. In this article, we will step into the fascinating world of AI chatbots and its two central races, and help your inner child reconcile with the dream of living among intelligent bots.

Blog Walkthrough

How They Chat: The Basics

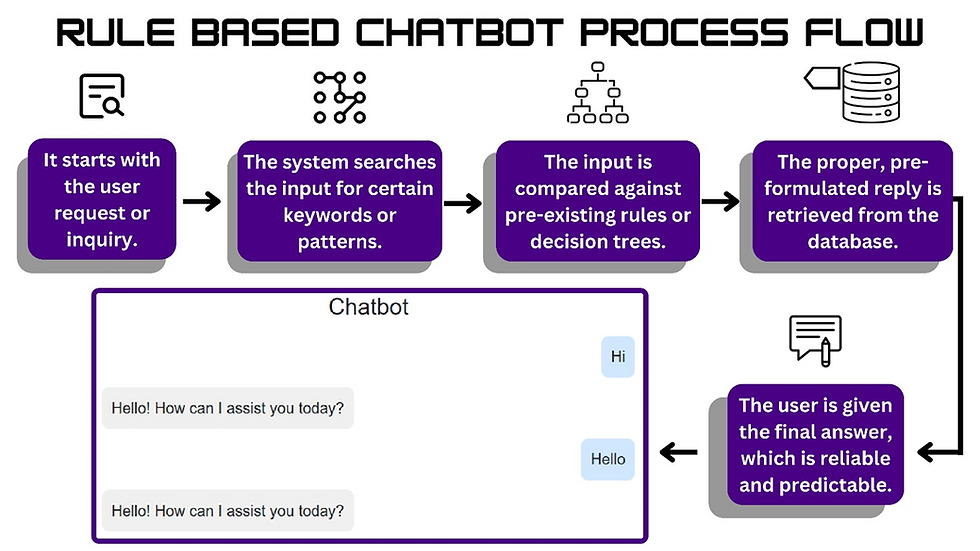

Think of a rule-based system as an AI that has been taught how to answer very particular questions, or how to react to very particular conditions. Such a system cannot learn anything beyond the knowledge that is hardwired (programmed) into it.

On the other hand, a chatbot based on neural networks acquires its knowledge through machine learning or deep learning: it is trained on data, and it can be trained further on new data without its rules being rewritten by hand.

In a rule-based chatbot, when you enter a query or a prompt, the program checks its database for the best response to the set of words you typed in, as if it had memorized the response instead of thinking it through.

GPT-powered chatbots do something quite different. In layperson's terms, they first convert your prompt into numbers and pass them through a neural network, where many operations are performed on them to produce another set of numbers, which is then converted back into a textual response.

During training, if the response is not what is expected, the neural network adjusts the operations it performs (its weights) so that it produces a better response next time. This process seems closer to what one could call thinking and learning.

This is also why rule-based systems are employed mainly in tasks that are simple and do not need novel responses, and why they cannot answer questions that are “out of the box” for them.

• Basic component set of a rule-based system:

1) Rules: These are like the laws that the system follows, programmed using conditional statements that associate a condition or a prompt with an action or a response.

A rule is logically represented as follows:

IF such and such condition THEN such and such action

OR

IF such and such prompt/query THEN such and such response

2) Knowledge Base: This is the main database that stores all the rules, the conditions the system might encounter, and the actions it should take. This database must be manually modified, reprogrammed, and restructured to update the system's knowledge or its so-called intelligence.

3) Inference Engine: This is the piece of software that fetches the rules, conditions, and actions from the knowledge base and links them together. It does this based on the user’s input to the system.

4) Working Memory: This is the main memory that retains the ongoing tasks by temporarily storing the rules, conditions, and actions in use. It is constantly updated as the inference engine pulls newer data from the knowledge base whenever new prompts must be addressed after the previous ones are completed.

5) User Interface: A rule-based chatbot obviously requires a sound user interface for typing in prompts, receiving responses, editing and commenting on them, and checking chat history. This is usually provided through a GUI.

A rule-based chatbot traverses what is called a decision tree (Fig.2), where each node corresponds to a specific next action/response based on the previous condition/prompt.
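The components listed above can be sketched in a few lines of Python. This is only an illustrative toy, not a production design: the patterns, the replies, and the names `KNOWLEDGE_BASE`, `FALLBACK`, and `infer` are all made up for this example.

```python
import re

# Knowledge base: each rule pairs a condition (a regex pattern) with a response.
# The patterns and replies are invented examples.
KNOWLEDGE_BASE = [
    (r"\b(hi|hello|hey)\b", "Hello! How can I help you?"),
    (r"\border\b.*\bstatus\b|\bwhere is my order\b", "Please share your order ID."),
    (r"\b(bye|goodbye)\b", "Goodbye!"),
]
FALLBACK = "Sorry, I didn't understand that."


def infer(user_input, working_memory):
    """Inference engine: return the response of the first rule whose condition matches."""
    text = user_input.lower()
    working_memory.append(text)  # working memory holds the ongoing conversation
    for pattern, response in KNOWLEDGE_BASE:
        if re.search(pattern, text):  # IF condition THEN response
            return response
    return FALLBACK  # no rule matched


memory = []
print(infer("Hello there", memory))
print(infer("Where is my order?", memory))
print(infer("Tell me a joke", memory))
```

Any input that no rule covers ends up at the fallback reply, which is exactly the rigidity discussed in the rest of this article.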

A GPT-powered system is a lot more complex than a simple rule-based system and thus has a far more complicated set of core components. GPT is an LLM, a Large Language Model: a fancy term for a model that can process natural human language in real time. In technical jargon, this kind of processing is called NLP (Natural Language Processing).

• Basic component set of a GPT-powered system (here, a chatbot):

1) BPE Tokenizer: Tokenization is the initial step in NLP, in which the input text is divided into smaller units called tokens (words, sub-words, or characters). GPT tokenizes raw input using Byte-Pair Encoding (BPE).

2) Neural Network:

a) Vectorizers: Token data is transformed into numerical data (vectors) arranged in matrices.

i) Token Embedder: Transforms each token into numerical form.

ii) Positional Embedder: Adds position information so that word order is preserved during parallel processing.

iii) Contextual Embedder: Uses context from nearby words to tell different senses apart (e.g., "bank" in "river bank" and "money bank").

b) Transformer Blocks: Central elements that generate responses through self-attention.

i) Causal Multi-Head Self-Attention: Processes contextual information for every token, looking only at the tokens that come before it.

ii) Feed-Forward Neural Networks: Perform non-linear transformations.

iii) Residual Connections & Layer Normalization: Keep training stable and preserve gradient flow.

3) Output Projection Layer: Projects the final output onto the vocabulary of the model.

4) Softmax Function Layer: Generates a probability distribution over the vocabulary, from which the next response token is picked, usually one of the most probable.
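To make the last two steps more concrete, here is a small sketch of how softmax turns the scores from the output projection layer into probabilities, and how a token is then picked. The four-word vocabulary and the scores are invented for illustration; a real model has tens of thousands of tokens.

```python
import math
import random


def softmax(logits):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy vocabulary and made-up scores from the output projection layer.
vocab = ["bank", "river", "money", "the"]
logits = [2.0, 1.0, 0.5, -1.0]

probs = softmax(logits)

# Greedy decoding: always take the most probable token.
greedy_token = vocab[probs.index(max(probs))]

# Sampling: draw a token in proportion to its probability.
rng = random.Random(0)  # seeded only so the sketch is repeatable
sampled_token = rng.choices(vocab, weights=probs, k=1)[0]

print(greedy_token, [round(p, 3) for p in probs])
```

Sampling instead of always taking the top token is one reason the same prompt can produce different replies on different runs.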

An intuitive GUI, such as ChatGPT's, improves usability with functions including copying, liking, editing, and commenting on responses.
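Going back to the first item in the component list, the idea behind Byte-Pair Encoding can be shown with a single merge step: count which pair of neighbouring symbols occurs most often, then glue that pair into one new symbol. This is a simplified sketch that works on characters and a three-word corpus; real GPT tokenizers start from bytes and repeat the merge many thousands of times. The function names are our own.

```python
from collections import Counter


def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words and return the most common one."""
    pairs = Counter()
    for symbols in words:
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += 1
    return pairs.most_common(1)[0][0]


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = []
    for symbols in words:
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged.append(out)
    return merged


# Start from single characters (an assumption made to keep the toy readable).
corpus = [list("low"), list("lower"), list("lowest")]
pair = most_frequent_pair(corpus)
corpus = merge_pair(corpus, pair)
print(pair, corpus)
```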

Where They Excel and Where They Lag

Let’s discuss the strengths and limitations of GPT-based and rule-based chatbots.

• Strengths of GPT-based chatbots:

GPT-based models can generate more human-like responses, which vary each time and feel more natural.

Thanks to their huge training data sets, GPT-based models can easily adapt to various topics and different language styles.

GPT models remember the context of the previous chat, keeping the conversation in line with the topic.

They can handle diverse topics and languages, making chats more fluid and versatile.

GPT models can provide a better, more human-like chat experience.

• Limitations of GPT-based chatbots:

GPT-based models may produce hallucinated or off-topic responses that are less precise.

They require more resources, such as large data sets, more processing power, and fine-tuning for better performance.

Sometimes GPT-based models can be less predictable.

GPT-based models may lose context during long conversations or complex topic discussions.

Without the additional step of fine-tuning, occasional inconsistencies in their answers may confuse users.

• Strengths of rule-based chatbots:

As rule-based systems follow a specific, hand-written set of rules, they give predictable answers.

Rule-based systems work reliably for well-defined tasks that are covered by the rules and data provided to them.

They deliver straightforward answers to simple queries and give consistent output for a given input.

Rule-based systems need fewer resources, as they don’t require heavy computational power.

Responses in rule-based systems are predefined, making them easier to audit, monitor, and adjust as needed.

• Limitations of rule-based chatbots:

Rule-based systems struggle with questions that deviate from their pre-set rules and values, which limits their usefulness for varied conversations.

Unlike GPT-based systems, rule-based systems cannot learn from experience; they only change through manual updates.

Rule-based systems fail to capture the natural flow that is the essence of human conversation, which makes interactions feel more robotic.

Rule-based systems require many changes to cover a new scenario or query, and a growing rule set can also slow down the chatbot's response generation.

Rule-based systems treat each input individually, making it harder to track the context of previous responses.

Comparison Parameters of Both Systems

Some important factors[1][2] to be considered when comparing both chatbots on technical grounds include:

1. Response time

2. User satisfaction score

3. Fallback error

4. Engagement rate

Let's discuss these topics in detail.

1. Response Time

Response time is the time elapsed between submitting a query to the chatbot and receiving its response.

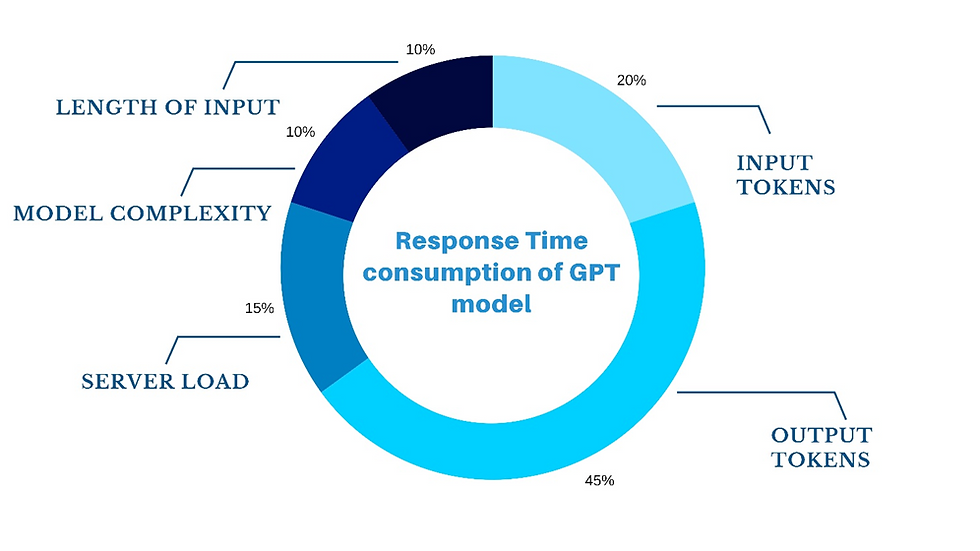

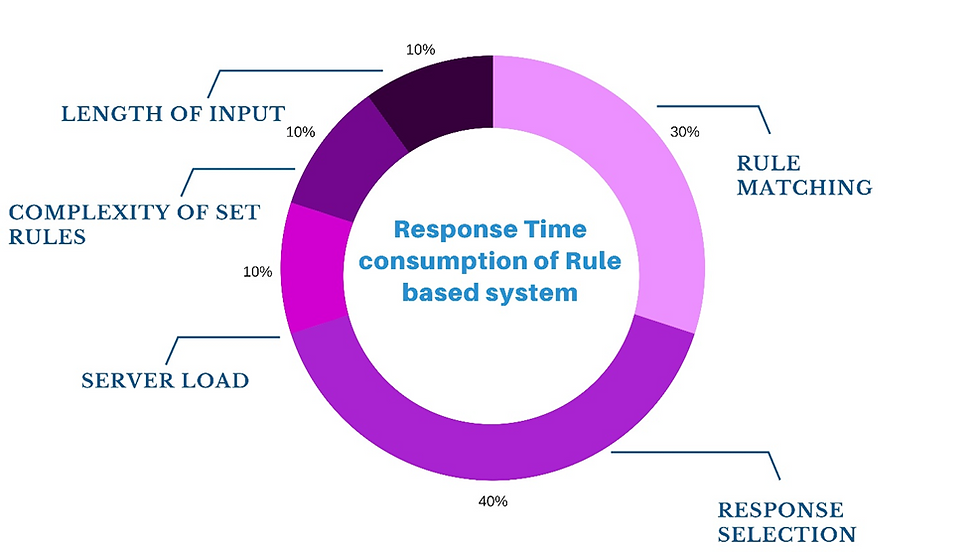

In a GPT-based chatbot, response time depends on various parameters such as the number of input and output tokens, server load, length of the input, and model complexity, meaning the number of layers and parameters.

In rule-based systems, response time tends to be shorter because the chatbot is just looking up a pre-programmed response through keyword matching or decision-tree reasoning, without any complicated calculations.

![Fig.5: Here is the graphical representation of API response time of different GPT models[3]](https://static.wixstatic.com/media/6c997f_48f5a99a1aba48f89c2b5a3ee60b1c60~mv2.png/v1/fill/w_980,h_656,al_c,q_90,usm_0.66_1.00_0.01,enc_avif,quality_auto/6c997f_48f5a99a1aba48f89c2b5a3ee60b1c60~mv2.png)

In the graph, the x-axis represents the number of tokens in a request and the y-axis the time taken by the model to generate a response.

For smaller inputs, ranging from 100 to 2,000 tokens, all models show short response times, below 1 second.

For inputs of more than 50,000 tokens, there is a sharp increase in the response time of almost all models.

GPT-4o mini, however, shows a smaller rate of increase than GPT-4o, which takes well over 8 s to generate a response.

This is because GPT-4o requires more processing, owing to its large context window and greater complexity.

By contrast, the response time of a rule-based system is very low, as it only scans the user input and tries to match it against the pre-defined rules in the code. It is typically a matter of milliseconds, or at most a few seconds. Most of that time goes into response selection, since the system scans through the entire list of pre-defined rules.
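As a rough illustration of why rule lookup is fast, the sketch below times a worst-case scan through 10,000 keyword rules using only the standard library. The rule set and the `match_rule` function are hypothetical, and the measured time will vary from machine to machine.

```python
import time

# Hypothetical keyword rules; the last one is the only match, forcing a full scan.
RULES = {f"keyword{i}": f"response {i}" for i in range(10_000)}


def match_rule(user_input):
    """Scan the rules in order and return the first response whose keyword is a word in the input."""
    words = set(user_input.split())
    for keyword, response in RULES.items():
        if keyword in words:
            return response
    return None


start = time.perf_counter()
reply = match_rule("please help with keyword9999")
elapsed_ms = (time.perf_counter() - start) * 1000
print(f"{reply!r} found in {elapsed_ms:.3f} ms")
```

The same `time.perf_counter()` pattern can be wrapped around an API call to a GPT model to compare the two kinds of system on your own setup.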

2. User satisfaction score

A numerical indication of how much users like the chatbot's responses, commonly gathered via post-interaction feedback, e.g. through thumbs up/down or a rating scale.

• In GPT-based chatbots, satisfaction depends on the model’s ability to process user input, keep the conversation flowing, use a suitable tone, and give relevant output. Misunderstandings and hallucinated responses may lower the model's satisfaction score.

• In rule-based systems, satisfaction relies on whether the predefined rules cover enough of the users' queries. Short, generic responses can be frustrating when a user's input doesn’t match any programmed rule.

![Fig.8: showcases a similar Customer satisfaction score meter by Appinio.[4]](https://static.wixstatic.com/media/6c997f_9dae5c71f27e48998234e70cd3a842b4~mv2.png/v1/fill/w_980,h_655,al_c,q_90,usm_0.66_1.00_0.01,enc_avif,quality_auto/6c997f_9dae5c71f27e48998234e70cd3a842b4~mv2.png)
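One common way to turn such feedback into a single number is to report the percentage of ratings that count as "satisfied". The sketch below assumes a 1 to 5 rating scale where 4 and 5 count as satisfied; both the scale and the threshold are assumptions for illustration, not something prescribed by the sources cited here.

```python
def csat_score(ratings, satisfied_threshold=4):
    """Percentage of ratings at or above the threshold (assumes a 1-5 scale)."""
    if not ratings:
        raise ValueError("no ratings collected")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100.0 * satisfied / len(ratings)


# Made-up post-chat ratings.
ratings = [5, 4, 2, 5, 3, 4, 1, 5]
print(f"CSAT: {csat_score(ratings):.1f}%")  # 5 of 8 ratings are 4 or 5 -> 62.5%
```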

3. Fallback error

A fallback error occurs when a chatbot fails to understand or correctly respond to a user request. This may happen because of ambiguous inputs or complex queries. It leads to a default or incorrect response such as “Sorry, I didn’t understand that”.

• GPT Chatbots: Fallback errors happen when inputs are ambiguous, when the model has been trained on limited contexts, or when the model hallucinates. Fine-tuning and reinforcement learning reduce these errors. When inputs are ambiguous, GPT tends to generate a fallback-style response that asks for more clarification. But GPT covers a broad range of topics, so such errors do not happen often.

• Rule-Based Systems: Fallback occurs when the input fails to match any pre-specified pattern, leading to a "no response found" failure. Although large rule sets minimize errors, they cannot accommodate every variation in conversation. Rule-based systems provide limited fallback responses and will fail if the subject is beyond what they have been programmed for.

This can be observed in the figures below (Fig.9 and Fig.10): when a rule-based system is given random data it generates the same output every time, whereas a GPT-based chatbot recognizes that random data has been given as input and prompts the user to ask again properly.
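A simple way to track this metric is to count how often the bot had to use its default reply. The sketch below is a minimal illustration: the `FALLBACK_REPLY` text and the sample log are invented, and a real system would more likely log an explicit "fallback" flag than compare strings.

```python
FALLBACK_REPLY = "Sorry, I didn't understand that."


def fallback_rate(bot_replies):
    """Share of replies (in percent) that were the default fallback message."""
    if not bot_replies:
        return 0.0
    fallbacks = sum(1 for reply in bot_replies if reply == FALLBACK_REPLY)
    return 100.0 * fallbacks / len(bot_replies)


# Made-up conversation log: one fallback out of four replies.
log = [
    "Hello! How can I help you?",
    "Please share your order ID.",
    FALLBACK_REPLY,
    "Goodbye!",
]
print(f"Fallback rate: {fallback_rate(log):.1f}%")
```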

4. Engagement rate

• In GPT-based chatbots, engagement relies on the quality of the responses, on whether the chatbot can sustain a long conversation, and on personalization. Features such as memory, sentiment awareness, and adaptive learning can enhance engagement.

• Engagement in rule-based chatbots tends to be lower, as interactions follow a prescribed order that limits the user's options. The more robotic tone of the responses is another reason for the lower engagement rate.

Engagement rate is usually calculated using the following formula:
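A widely used definition, given here as an assumption rather than as the exact formula intended above, is the share of chat sessions in which the user actually interacted: engagement rate = (engaged sessions / total sessions) × 100. In the sketch below a session counts as engaged when the user sent at least two messages; that threshold is also just an illustrative choice.

```python
def engagement_rate(session_message_counts, min_messages=2):
    """Percentage of sessions with at least `min_messages` user messages."""
    if not session_message_counts:
        return 0.0
    engaged = sum(1 for n in session_message_counts if n >= min_messages)
    return 100.0 * engaged / len(session_message_counts)


# Made-up number of user messages in five sessions.
sessions = [1, 5, 3, 0, 2]
print(f"Engagement rate: {engagement_rate(sessions):.1f}%")  # 3 of 5 sessions -> 60.0%
```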

Both categories of chatbots possess advantages and disadvantages. GPT models are good at contextualization but could experience latency, while rule-based models respond immediately but are not flexible. The perfect chatbot should offer low latency, high accuracy, and a measure of interactivity so it performs efficiently while providing a seamless user experience.

Let's proceed to the second part, wherein we discuss the larger applications of GPT chatbots and their replacement of rule-based systems across finance, medicine, science, technology, and research. We'll see how these models solve real-time issues with efficient solutions and contextual understanding.

The Journey and Road Ahead for GPT based Chatbots

The first integration of GPT models as a chatbot was done by OpenAI in 2022, when they introduced ChatGPT, built on the GPT-3.5 model, as a website for people to use.

Soon it gained popularity and flooded the internet with its various applications and integration capabilities. OpenAI also provides API support for these chat-based GPT models, so developers can integrate them into any application they are building.

The GPT models have become stronger and more relevant with each release, and as of 2025 they handle most NLP tasks well and process user requests efficiently and in real time.

With this release, an entirely new way of approaching certain fields emerged: the traditional hardcoded chatbots with fixed scope began to be replaced by GPT chatbots.

Since 2022, the most effective implementation of these chatbots has been observed in the customer support domain of service-based industries.

Some applications are as follows:

1. Customer QnA and Support Systems

Many customer service platforms, like Intercom and Zendesk, are integrating and adapting GPT-based chatbots to improve support operations and offer 24/7 service to customers globally.

Also, various startups and established enterprises in sectors like finance, healthcare, and e-commerce are integrating these models into their customer service and internal operations.

Consider AI chatbots that generate real-time responses to customer queries about food orders. You might ask such a bot questions like:

Where is my order?

The bot contacts the server, fetches the latest location of the delivery partner, and sends an updated response to the user.

How long will it take?

As the chatbot can query real-time databases and perform the necessary calculations, it can estimate the time of delivery and reassure the customer with the latest information about their order.

Or even: why is the delay occurring?

The chatbot checks and reports whether the order is ready or not, or, if the latest data is unavailable, takes a predictive approach and suggests contacting the dealer or the delivery partner.
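One way such a bot can answer from live data is to look up the order first and place the result into the prompt that is sent to the model. The sketch below shows only that preparation step. The `lookup_order` function, its fields, and the order ID are hypothetical stand-ins for a real delivery backend, and the message format follows the role/content convention used by chat-style LLM APIs.

```python
def lookup_order(order_id):
    """Hypothetical backend call; a real bot would query the delivery service here."""
    return {"order_id": order_id, "status": "out for delivery", "minutes_away": 12}


def build_messages(user_question, order):
    """Put live order data into the system prompt so the model can answer from it."""
    context = (
        f"Order {order['order_id']} is {order['status']}; "
        f"estimated arrival in {order['minutes_away']} minutes."
    )
    return [
        {"role": "system", "content": "Answer using only this order data. " + context},
        {"role": "user", "content": user_question},
    ]


messages = build_messages("Where is my order?", lookup_order("A123"))
for message in messages:
    print(message["role"], "->", message["content"])
```

The resulting `messages` list would then be passed to the chat model, which phrases the answer in natural language.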

If we tried to solve these problems with a traditional rule-based system, it would take a lot of effort and programming to generate accurate results. Sentiment analysis would also be inaccurate, as the chatbot wouldn't contextually understand customer queries or the emotions expressed in the chat.

With GPT-based techniques, the chatbot can pick up on the customer’s emotions and requirements. With NLP, it provides a response that is both convincing and appealing, while keeping a good balance of accuracy and realism.

Amazon also introduced "Amazon Q"[5], a chatbot developed for enterprise use and based on Amazon Titan and GPT generative AI. It was announced on November 28, 2023.

• Amazon Q assists in troubleshooting issues in cloud applications or group chats.

• It is a corporate AI assistant that streamlines workflows and helps employees develop complex real-world applications and analyze data efficiently.

• It is integrated into the Amazon Web Services management console.

2. Advanced Filtering and Sorting in Customer Support

This is a really valuable feature of GPT-based chatbots on e-commerce websites like Amazon and Flipkart. When the bot is fed the e-commerce platform's data along with the customer's requirements, it produces a list of relevant products, which saves the customer the time of selecting filters manually.

The bot can also generate a detailed and precise comparison of products that is unbiased and backed by actual statistical data. People favor these comparisons over reviews, which may or may not be biased. Scenarios can also be created in which a particular product is modified according to the user's inputs.

3. The Medical Field

Google’s Med-Gemini was tested on various 2-D and 3-D image analysis and disease prediction tasks. The user pointed at an image of an organ with the mouse, and the bot analyzed that data efficiently, identifying the organ and drawing inferences by comparison with a healthy person’s organ. Such analysis techniques could help the medical sector with accurate diagnosis, and can also produce discharge summaries that serve as a reference for the patient.

It scored 91.1% on the MedQA benchmark.[6]

As these models are continually fine-tuned and improved, their efficiency and predictability keep increasing, leading to their use in everyday life and making them an indispensable part of our daily routines.

4. An implementation of an AI-based chatbot in a website using an API key

Our team implemented a chatbot using TogetherAI's[7] free API key. A local Python Flask server[8] was set up to serve a basic chatbot window with a query box, a submit button, and a clear-chat button.

This particular chatbot uses Llama-3.3-70B-Instruct-Turbo-Free, an AI model based on the transformer architecture that can be integrated into user-centric applications for many use cases.

The sample code for the chatbot server is as follows:

    from flask import Flask, render_template, request, jsonify
    from together import Together

    app = Flask(__name__)

    # Replace the placeholder with the API key generated on the Together AI website.
    client = Together(api_key="your_API_Key_generated_from_together_AI_website")

    # Conversation so far. It lives at module level, so every visitor shares
    # the same history; that is fine for a local demo only.
    chat_history = [{"role": "system", "content": "You are a chatbot and AI assistant."}]


    @app.route("/")
    def home():
        # Serve the chat window (templates/index.html).
        return render_template("index.html")


    @app.route("/chat", methods=["POST"])
    def chat():
        # Expect a JSON body of the form {"message": "..."}.
        data = request.get_json(silent=True) or {}
        user_message = data.get("message")
        if not user_message:
            return jsonify({"error": "No message received"}), 400

        chat_history.append({"role": "user", "content": user_message})

        # Send the whole history so the model keeps the context of the chat.
        response = client.chat.completions.create(
            model="meta-llama/Llama-3.3-70B-Instruct-Turbo-Free",
            messages=chat_history,
            max_tokens=256,
            temperature=0.7,
            top_p=0.7,
            top_k=50,
            repetition_penalty=1,
            stop=["<|eot_id|>", "<|eom_id|>"],
            stream=False,
        )
        reply = response.choices[0].message.content

        chat_history.append({"role": "assistant", "content": reply})
        return jsonify({"response": reply})


    if __name__ == "__main__":
        # debug=True enables auto-reload; use it for local development only.
        app.run(debug=True)

The chatbot contacts the Together AI servers that host the Llama model, takes the generated output, and displays it in the index.html template served by our Flask server.

As shown below in Fig.13, the responses are generated by the chatbot. This chatbot can be tuned to user requirements for further use.
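For readers who want to try the /chat route without the HTML page, the request that the page sends can be reproduced with Python's standard library. This is a small sketch that assumes the Flask server is running locally on its default address, http://127.0.0.1:5000; the `build_chat_request` helper is our own name, not part of Flask or Together AI.

```python
import json
import urllib.request


def build_chat_request(message, base_url="http://127.0.0.1:5000"):
    """Build a POST request carrying the JSON body that the /chat route expects."""
    body = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Hello!")
print(req.full_url, req.data)

# With the Flask server running, the reply could be read like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["response"])
```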

Conclusion

As this blog draws to a close, we invite readers to delve into the world of GPT models and try using free APIs to see their practical uses. We discussed their increasing superiority over rule-based systems, explaining how parameters play a role in accuracy.

GPT chatbots, despite changing industries, have yet to be fine-tuned with ethical guidelines. Ethical implementation will be essential for success in the changing tech environment.

References
